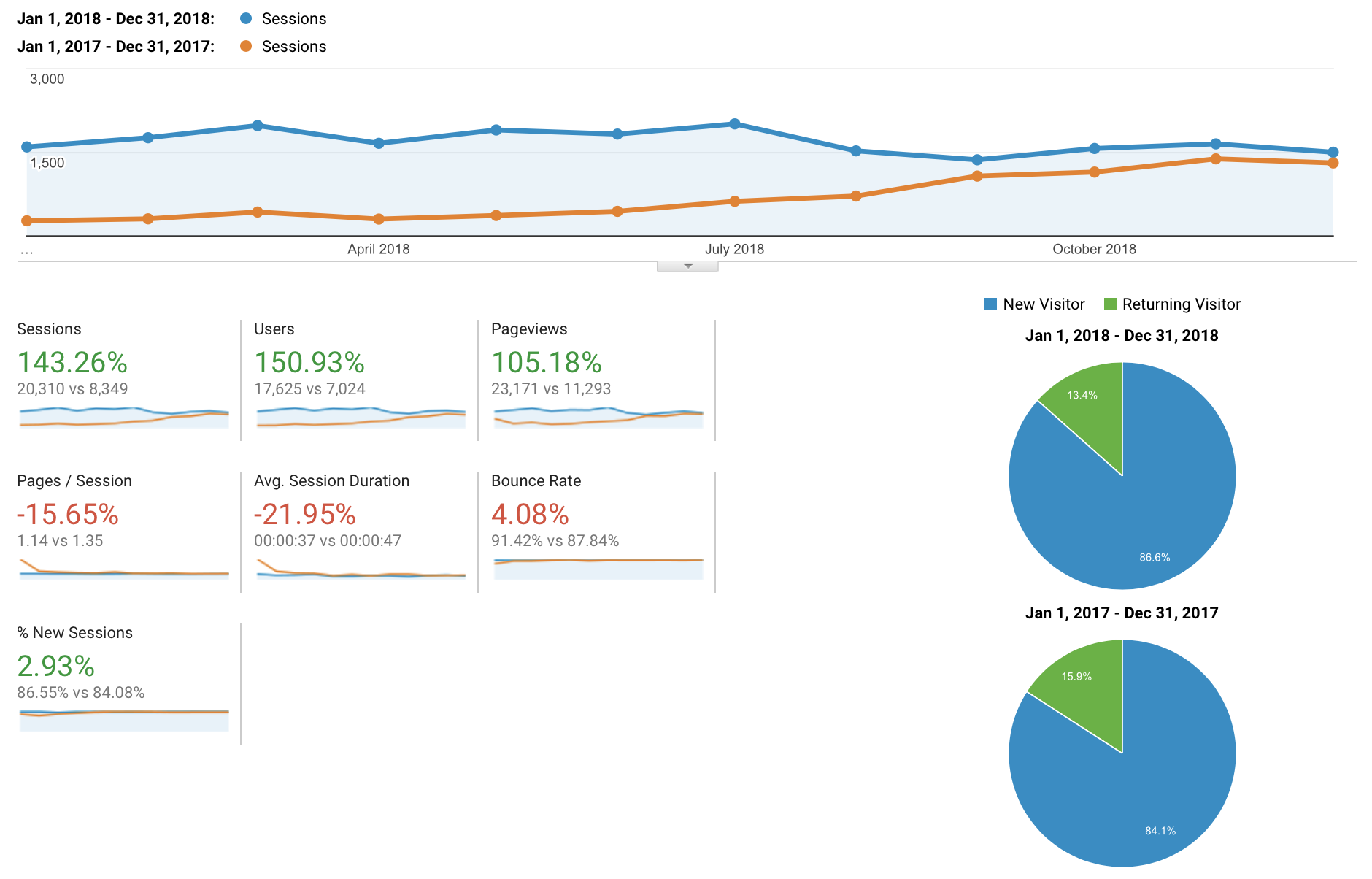

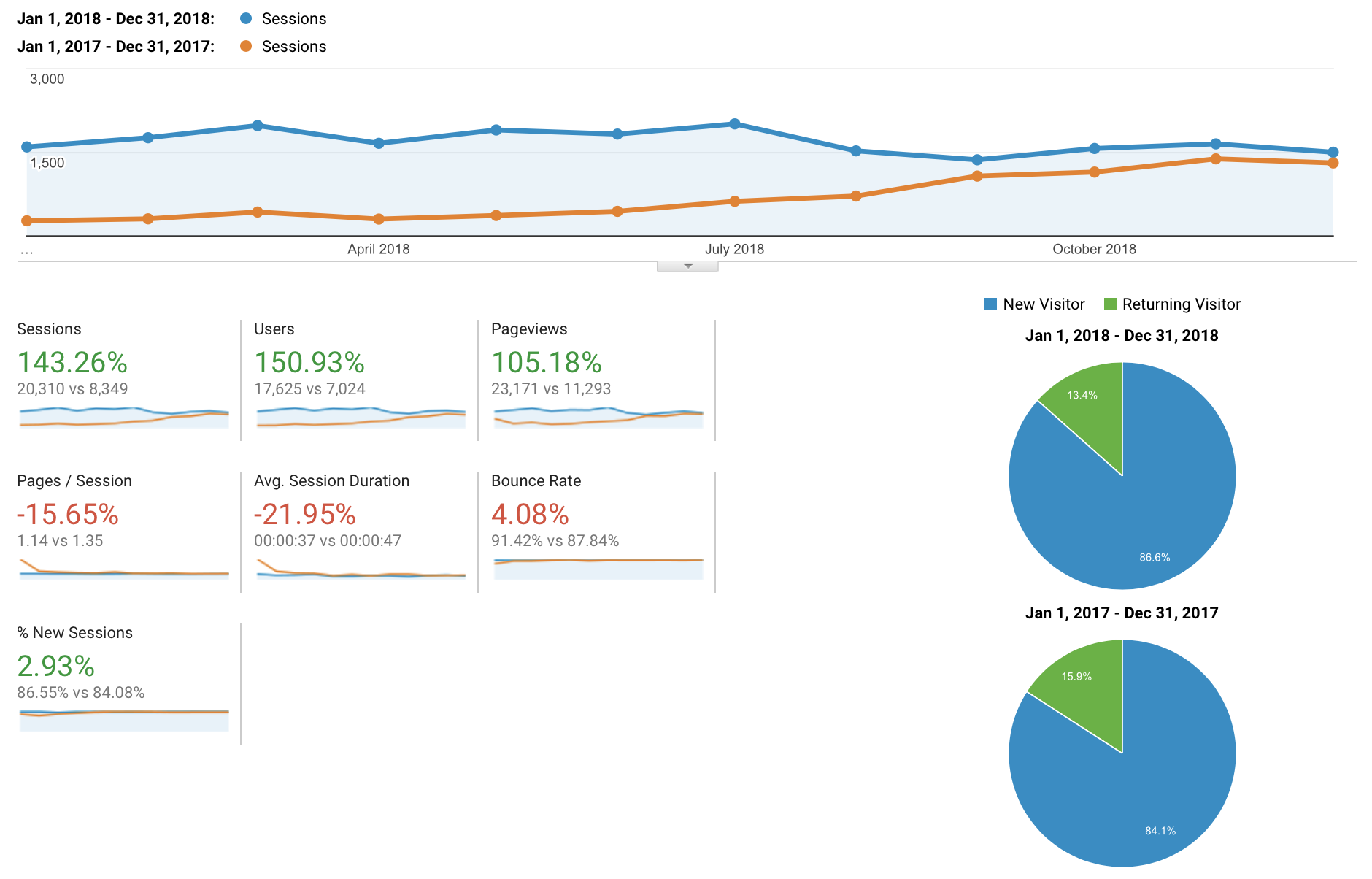

The goal was the same as in 2017: to write 52 posts, one per week. It was not achieved: there are 32, fewer than the 45 in 2017.

The command to get a document's size is Object.bsonsize. The following query finds the largest document; use it only on a small collection, because it can be slow:

db.getCollection('my_collection').find({}).map(doc => {
  return {_id: doc._id, size: Object.bsonsize(doc)};
}).reduce((a, b) => a.size > b.size ? a : b)

To do this faster, use MongoDB mapReduce:

db.getCollection('my_collection').mapReduce(
  function() {
    emit('size', {_id: this._id, size: Object.bsonsize(this)});
  },
  function(key, values) {
    return values.reduce((a, b) => a.size > b.size ? a : b);
  },
  {out: {inline: 1}}
)

On the MongoDB side, the current connections can be found with the db.currentOp() command. They can then be grouped by client IP, counted, and sorted.

var ips = db.currentOp(true).inprog.filter(
  d => d.client
).map(
  d => d.client.split(':')[0]
).reduce(
  (ips, ip) => {
    if(!ips[ip]) {
      ips[ip] = 0;
    }
    ips[ip]++;
    return ips;
  }, {}
);

Object.keys(ips).map(
  key => {
    return {"ip": key, "num": ips[key]};
  }
).sort(
  (a, b) => b.num - a.num
);

The result will look like this:

[
  {
    "ip" : "11.22.33.444",
    "num" : 77.0
  },
  {
    "ip" : "11.22.33.445",
    "num" : 63.0
  },
  {
    "ip" : "11.22.33.344",
    "num" : 57.0
  }
]
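The same grouping can be sketched in Python for a list of operations that has already been fetched, however it was obtained. This is only an illustration: the function name `count_connections_by_ip` and the sample records are made up, and the records merely imitate the shape of the `inprog` entries used above.

```python
from collections import Counter


def count_connections_by_ip(inprog):
    """Count operations per client IP, most connections first."""
    counts = Counter(
        op["client"].split(":")[0]  # "host:port" -> "host"
        for op in inprog
        if op.get("client")  # entries without a client field are skipped
    )
    return [{"ip": ip, "num": num} for ip, num in counts.most_common()]


# Made-up sample in the shape of db.currentOp(true).inprog entries.
sample = [
    {"client": "10.0.0.1:50001"},
    {"client": "10.0.0.2:50002"},
    {"client": "10.0.0.1:50003"},
    {"desc": "entry without a client"},
]
result = count_connections_by_ip(sample)
```

`Counter.most_common()` already returns the pairs sorted by count in descending order, so no separate sort step is needed.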

Then, if there are several Docker containers on the client host, the connections can be found with the netstat command in each of them.

Suppose there are several MongoDB replicas with IPs starting with 44.55... and 77.88...; the command to count all connections to the replicas is:

netstat -tn | grep -e 44.55 -e 77.88 | wc -l
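If the netstat output is captured as text, a stricter count can be sketched in Python. This is an assumption-laden illustration: it expects the Linux `netstat -tn` column layout (Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State), the function name `count_connections` is hypothetical, and the sample output is made up. Unlike the grep pipeline, it matches the prefix only at the start of the foreign address.

```python
def count_connections(netstat_output, prefixes):
    """Count TCP rows whose foreign address starts with one of the prefixes."""
    total = 0
    for line in netstat_output.splitlines():
        fields = line.split()
        # Skip headers and anything that is not a TCP data row.
        if len(fields) < 5 or not fields[0].startswith("tcp"):
            continue
        # fields[4] is the foreign address, e.g. "44.55.1.10:27017".
        if fields[4].startswith(tuple(prefixes)):
            total += 1
    return total


# Made-up output in the assumed `netstat -tn` layout.
sample_output = """Active Internet connections (w/o servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State
tcp        0      0 172.17.0.2:41234        44.55.1.10:27017        ESTABLISHED
tcp        0      0 172.17.0.2:41236        77.88.2.20:27017        ESTABLISHED
tcp        0      0 172.17.0.2:51000        10.44.55.3:443          ESTABLISHED
"""
replica_connections = count_connections(sample_output, ["44.55.", "77.88."])
```

The third sample row contains `44.55` in the middle of the address, so grep would count it while this sketch does not.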

The Flask-SocketIO docs do not mention that Eventlet has a max_size option, which by default limits the maximum number of client connections open at any time to 1024. There is no way to pass it through the flask run command, so the application should be run with socketio.run, for example:

...

if __name__ == '__main__':
    socketio.run(app, host='0.0.0.0', port=8080, max_size=int(os.environ.get('EVENTLET_MAX_SIZE', 1024)))

Suppose there is a large tasks.py file, like this:

@celery.task()
def task1():
    ...

@celery.task()
def task2():
    ...
    task1.delay()
    ...

...

It is a good idea to split it into smaller files, but Celery task autodiscovery (autodiscover_tasks) by default searches for tasks in the package.tasks module, so one way to do this is to create a tasks package and import the tasks from the other files in __init__.py.

__init__.py

from .task1 import task1
from .task2 import task2

__all__ = ['task1', 'task2']

task1.py

@celery.task()
def task1():
    ...

task2.py

from .task1 import task1

@celery.task()
def task2():
    ...
    task1.delay()
    ...

The @csrf.exempt decorator does not work on Resource methods or in Resource decorators; it should be applied at the Api level.

Here is an example of how to exclude resources from CSRF protection based on their class:

def csrf_exempt_my_resource(view):
    if issubclass(view.view_class, MyResource):
        return csrf.exempt(view)
    return view

api_blueprint = Blueprint('api', __name__)
api = Api(api_blueprint, title='My API', decorators=[csrf_exempt_my_resource])

Or for all resources:

api_blueprint = Blueprint('api', __name__)
api = Api(api_blueprint, title='My Private API', decorators=[csrf.exempt])
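The class-based check can be tried in isolation with stand-ins. In this sketch nothing comes from Flask: `exempt`, `Resource`, `OtherResource` and `make_view` are hypothetical placeholders, and the only assumption carried over is that a class-based view function exposes its class as `view.view_class`.

```python
exempt_views = set()


def exempt(view):
    """Stand-in for csrf.exempt: it only remembers the view."""
    exempt_views.add(view)
    return view


class Resource:
    """Stand-in for the API framework's Resource base class."""


class MyResource(Resource):
    pass


class OtherResource(Resource):
    pass


def make_view(view_class):
    """Build a view function that carries view_class, as class-based views do."""
    def view(*args, **kwargs):
        return view_class.__name__
    view.view_class = view_class
    return view


def csrf_exempt_my_resource(view):
    # Exempt only views built from MyResource or its subclasses.
    if issubclass(view.view_class, MyResource):
        return exempt(view)
    return view


my_view = csrf_exempt_my_resource(make_view(MyResource))
other_view = csrf_exempt_my_resource(make_view(OtherResource))
```

Only `my_view` ends up in `exempt_views`; `other_view` is returned unchanged and would stay protected.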

I like the idea of separating business logic from models and views into services. There are more details in these slides from the EuroPython talk.

Usually in Flask-Admin, a new column is added as a new method with the @property decorator, and the model becomes fat.

It is also not good to put anything that runs queries in a property. Another way is to put the function into the services and use a column formatter in Flask-Admin.

def get_some_data(model_id):
    """Function in services."""
    return RelatedModel.objects(model=model_id).count()

class MyModelView(ModelView):
    column_formatters = {
        'related_model_count': lambda view, context, model, name: get_some_data(model.id)
    }
    column_list = [..., 'related_model_count']

With docker-compose, health checks for the Flask app and the Celery worker can be set up in the following way:

flask_app:
  ...
  healthcheck:
    test: wget --spider --quiet http://localhost:8080/-/health

celery_worker:
  ...
  command: celery worker --app app.celeryapp -n worker@%h --loglevel INFO
  healthcheck:
    test: celery inspect ping --app app.celeryapp -d worker@$$HOSTNAME

Here /-/health is just a simple route:

@app.route("/-/health")
def health():
    return 'ok'
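If an image ships without wget, the same probe could be done with the Python standard library. This is a minimal sketch under that assumption, not part of the original setup; the function name `is_healthy` and the default timeout are made up for illustration.

```python
import urllib.error
import urllib.request


def is_healthy(url, timeout=2.0):
    """Return True if the URL answers with HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Covers refused connections, timeouts and 4xx/5xx responses.
        return False
```

A healthcheck command would need to turn the result into an exit code, for example by calling `sys.exit(0 if is_healthy("http://localhost:8080/-/health") else 1)` from a small script.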

Here is a small script that compares the version of the running service with the version in the docker-compose file and runs an update if they differ.

REDIS_VERSION=$(docker service ls | grep "redis" | awk '{print $5}')
REDIS_NEW_VERSION=$(grep -Po "image:\s*\Kredis:.*" docker-compose.yml)

if [ "$REDIS_VERSION" != "$REDIS_NEW_VERSION" ]; then
    echo "update $REDIS_VERSION -> $REDIS_NEW_VERSION"
    docker service update --image "$REDIS_NEW_VERSION" app_redis
fi
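The comparison step of the script can also be sketched in Python, which makes it easy to check against sample input. The helper names `image_from_compose` and `needs_update`, the compose snippet and the version tags are all made up for illustration; the sketch only parses text and does not call Docker.

```python
import re


def image_from_compose(compose_text, name):
    """Return the first `image: <name>:<tag>` value in the text, or None."""
    match = re.search(r"image:\s*(" + re.escape(name) + r":\S+)", compose_text)
    return match.group(1) if match else None


def needs_update(running_image, compose_text, name="redis"):
    """True when the compose file pins a different image than the running one."""
    wanted = image_from_compose(compose_text, name)
    return wanted is not None and wanted != running_image


# Made-up compose fragment with a hypothetical tag.
compose = """
services:
  redis:
    image: redis:5.0.3
"""
```

One difference from the grep pattern: `\S+` stops at the first whitespace, while `.*` in the shell version captures the rest of the line, including any trailing comment.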

To avoid rebuilding images on each run, it is possible to use Docker socket binding. It’s covered in the documentation; here is a more detailed example.

© 2025 Andrey Zhukov's Tech Blog.